This is the third part of my blog series on fitting the 4-parameter Wiener model with brms. The first part discussed how to set up the data and model. The second part was concerned with (mostly graphical) model diagnostics and the assessment of the adequacy (i.e., the fit) of the model. This third part will inspect the parameter estimates of the model with the goal of determining whether there is any evidence for differences between the conditions. As before, this part is completely self sufficient and can be run without running the code of Parts I or II.

As I promised in the second part of this series of blog posts, the third part did not take another two months to appear. No, this time it took almost eight month. I apologize for this, but we all know the planning fallacy and a lot of more important things got into the way (e.g., teaching).

As this part is relatively long, I will provide a brief overview. The next section contains a short explanation for the way in which we will perform hypothesis testing. This is followed by a short section loading some packages and the fitted model object and giving a small recap of the model. After this comes one relatively long section looking at the drift rate parameters in various ways. Then we will take look at each of the other three parameters in turn. Of especial importance will be the subsection on the non-decision time. As described in more detail below, I believe that this parameter cannot be interpreted. In the end, I give a brief overview of some of the limits of the present model and how it could be improved upon.

Bayesian Hypothesis Testing

The goal of this post is to provide evidence for differences in parameter estimates between conditions. This posts will present different ways to do so. Importantly, different ways of how to produce such evidence is only meant in the technical sense. In statistical terms we will always do basically the same thing: inspect difference distributions resulting from linear combinations of cell-wise posterior distributions of the group-level model parameter estimates. The somewhat technical phrase “linear combinations of cell-wise posterior distributions” often simply means the difference between two distributions. For example, the difference distribution resulting from subtracting the posterior of the speed condition from the posterior of the accuracy condition.

As a reminder, a posterior distribution is the probability distribution of the parameter conditional on data and model (where the latter includes the parameter priors). It answers the question which parameters are likely given our prior knowledge and the data. Therefore, the posterior distribution of the difference answers, for example, which difference values between two conditions are likely or not. With such a difference distribution we can then do two things.

First, we can check whether the x%-highest posterior density (HPD) or credibility interval of this difference distribution includes 0. If 0 is within the 95% HPD interval it could be seen a plausible value. If 0 is outside the 95% interval we could regard it as not plausible enough and would conclude that there is evidence for a difference.

Second, we can evaluate how much of the difference distribution is on one side of 0. If this value is considerably away from 50%, this constitutes evidence for a difference. For example, if all of the posterior samples for a specific difference are larger than zero, this provides considerable evidence that the difference is above 0.

The approach of investigating posterior distributions to gauge differences between conditions is only one approach for hypothesis testing in a Bayesian setting. And, at least in the psychological literature, it is not the most popular one. More specifically, many of the more vocal proponents of Bayesian statistics in the psychological literature advocate hypothesis testing using Bayes factors (e.g., ). One prominent exception to this rule in psychology is maybe John . However, he proposes yet another approach of inference based on posterior distributions as used here. In general, I agree with many of the argument pro Bayes factor, especially in cases as the current one in which all relevant hypothesis or competing models are nested within one large (super) model.

The main difficulty when using Bayes factors is their extreme sensitivity to the parameter priors. In a situation with nested models, this is in principle not such a big problem, because one could use Jeffrey’s default prior approach (e.g., ). have extended this approach to general ANOVA designs (I am sure they were not the first to have this idea, but they were at least the first to popularize this idea in psychology). Quentin Gronau and colleagues have applied it to accumulator models, including the diffusion model. The general idea is to reparameterize the model using effect parameters which are normalized using, for example, the residual variance. For example, for a two sample design parameterize the model using a standardized difference such as Cohen’s d. Then it is comparatively easy and uncontroversial to put a prior on the standardized effect size measure. In the present case, in which the model does not contain a residual variance parameter, one could use the variance estimate of the group-level distribution for each parameter for such a normalization.

Unfortunately, brms does to the best of my knowledge not contain the ability to specify a parameterization and prior distribution in line with Jeffrey’s default Bayes factor. And as far as I remember a discussion I had on this topic with Paul Bürkner some time ago, it is also unlikely brms will ever get this ability. Consequently, I feel that brms is not the right tool for model selection using Bayes factors. Whereas it offers this ability now from a technical side (using our bridgesampling package), it only allows models with an unnormalized parameterization. I believe that such a parameterization is in most cases not appropriate for Bayes factors based model selection as the priors cannot be specified in a ‘default’ manner. Thus, I cannot recommend brms for Bayes factor based model selection at the moment. In sum, the reason for basing our inferences solely on posterior distributions in the present case is practical constraints and not philosophical considerations.

One final word of caution for the psychological readership. Whereas Bayes factors are clearly extremely popular in psychology, this is not the case in many other scientific disciplines. For example, the patron saint of applied Bayesian statistics, Andrew Gelman, is a self-declared opponent of Bayes factors: “I generally hate Bayes factors myself”. As far as I can see, this disagreement comes from the different type of data different people work with. When working with observational (or correlational) data, as Andrew Gelman usually does, tests of the presence of effects (or of nullity) are either a big no-no (e.g., when wanting to do causal inference) or simply not interesting. We know that the real world is full of relationships, especially small ones, between arbitrary things. So getting effects simply by increasing N is just not interesting and estimation is the more interesting approach. In contrast, for experimental data, we often have true null hypothesis and testing of those makes a lot of sense. For example, if Bem was right and there truly were PSI, we could surely exploit this somehow. But as far as we can tell, the effect is truly null. In this case we really need ypothesis testing.

Getting Started

We start with loading some packages for analyzing the posterior. Since the beginning of this series, I have more and more become a fan of the whole tidyverse, so we import it completely. We of course also need brms. As shown below, we will need a few more packages (especially emmeans and tidybayes), but these are only loaded when needed.

library("brms")

library("tidyverse")

theme_set(theme_classic()) # theme for ggplot2

options(digits = 3)

Then we will also need the posterior samples, we can load them in the same way as before from my github page. Note that we neither need the data nor the posterior predictive distribution this time.

tmp <- tempdir()

download.file("https://singmann.github.io/files/brms_wiener_example_fit.rda",

file.path(tmp, "brms_wiener_example_fit.rda"))

load(file.path(tmp, "brms_wiener_example_fit.rda"))

We begin with looking at the group-level posteriors. An overview of their posterior distributions can be obtained using the summary function.

# Estimate Est.Error l-95% CI u-95% CI # conditionaccuracy:frequencyhigh -2.944 0.1971 -3.345 -2.562 # conditionspeed:frequencyhigh -2.716 0.2135 -3.125 -2.299 # conditionaccuracy:frequencynw_high 2.238 0.1429 1.965 2.511 # conditionspeed:frequencynw_high 1.989 0.1785 1.626 2.332 # bs_conditionaccuracy 1.898 0.1448 1.610 2.186 # bs_conditionspeed 1.357 0.0813 1.200 1.525 # ndt_conditionaccuracy 0.323 0.0173 0.289 0.358 # ndt_conditionspeed 0.262 0.0154 0.232 0.293 # bias_conditionaccuracy 0.471 0.0107 0.449 0.491 # bias_conditionspeed 0.499 0.0127 0.474 0.524 # Warning message: # There were 7 divergent transitions after warmup. Increasing adapt_delta above 0.8 may help. # See http://mc-stan.org/misc/warnings.html#divergent-transitions-after-warmup

As a reminder, we have data from a lexical decision task (i.e., participants have to decide whether presented strings are a word or not) and frequency is the factor determining the true status of a string, with high referring to words and nw_high to non-words. Consequently, for the drift rate (the first four rows in the results table) the frequency factor determines the sign of the parameter estimates with the drift rate for words (rows 1 and 2) being clearly negative (i.e., those trials mostly hit the lower boundary for the word decision) and the drift rate for non-words (rows 3 and 4) being clearly positive (i.e., those trials mostly hit the upper boundary for non-word decisions). Furthermore, there could be differences between the drift rates in the accuracy or speed conditions. Specifically, in the speed conditions drift rates seem to be less extreme (i.e., nearer to 0) compared to the accuracy conditions.

The other three parameters, only differ between the condition factor. Given the experimental manipulation of accuracy versus speed condition, we expect differences for the boundary separation, parameters starting with bs_. For the non-decision time, parameters starting with ndt_, there also appears to be a small effect as the 95% only overlap slightly. However, as discussed in detail below, we should be careful in interpreting this difference. Finally, for bias, parameters starting with bias_, there might be a difference or not. Furthermore, at least in the accuracy condition there appears to be a bias for “word” responses.

One way to test differences between conditions is using the hypothesis function in brms. However, I was not able to get it to work with the current model. I suspect the reason for this is the somewhat unconventional parameterizations where each cell gets one parameter (in some sense each cell has its own intercept, but there is no overall intercept). This contrasts with a more “standard” parameterization in which there is one intercept (for either the unweighted means or one of the cells) and the remaining parameters capture the differences between the intercept and the cell means. As a reminder, I chose this unconventional parameterization in the first place to make the specification of the parameters priors easier. Additionally, this is a common parameterization when programming cognitive models by hand.

emmeans and tidybayes: Differences in the Drift Rate

An alternative is to use the great emmeans package by Russel Lenth. I am a huge fan of emmeans and use it all the time when using “normal” statistical models (e.g., ANOVAs, mixed models), independent of whether I use frequentist methods (e.g., via afex) or Bayesian methods (e.g., rstanarm or brms). Unfortunately, it appears as if emmeans at the moment only allows an analysis of the main parameter of the response distribution for models estimated with brms, which in our case is the drift rate. If someone were to extend emmeans to allow using brms models with all parameters, I would be very happy and thankful. In any case, I highly recommend to check out the emmeans vignettes to get an overview of what type of follow-up tests are all possible with this great package.

As I recently learned, emmeans works quite nicely together with tidybayes, a package that enables working with posterior draws within the tidyverse. tidybayes has a surprisingly large package footprint (i.e., it imports quite a lot of other packages) for a package with a comparatively small functionality. I guess this is a consequence of being embedded within the tidyverse. In any case, many of the imported packages are already in the search path thanks to loading the tidyverse above and attaching should not take that long here.

library("emmeans")

library("tidybayes")

We begin with emmeans only to assure ourselves that it works as expected. For this, we get the estimated marginal means plus 95%-highest posterior density (HPD) intervals which match the output of the fixed effects for the estimate of the central tendency (which is the median of the posterior samples in both cases). As a reminder, the fact that the cell estimates match the parameter estimates is of course a consequence of the unusual parameterization which is picked up correctly by emmeans. The lower and upper bounds of the intervals differ slightly between the summary output from brms and emmeans, a consequence of using different ways of calculating the intervals (i.e., quantiles versus HPD intervals).

fit_wiener %>% emmeans( ~ condition*frequency) # condition frequency emmean lower.HPD upper.HPD # accuracy high -2.94 -3.34 -2.56 # speed high -2.72 -3.10 -2.28 # accuracy nw_high 2.24 1.96 2.50 # speed nw_high 1.99 1.64 2.34 # # HPD interval probability: 0.95

Using HPD Intervals And Histograms

As a first test, we are interested in assessing whether there is evidence for a difference between speed and accuracy conditions for both words (i.e., frequency = high) and non-words (i.e., frequency = nw_high). There are many ways to do this with emmeans one of them is via the by argument and the pairs function.

fit_wiener %>%

emmeans("condition", by = "frequency") %>%

pairs

# frequency = high:

# contrast estimate lower.HPD upper.HPD

# accuracy - speed -0.225 -0.6964 0.256

#

# frequency = nw_high:

# contrast estimate lower.HPD upper.HPD

# accuracy - speed 0.249 -0.0647 0.550

#

# HPD interval probability: 0.95

Here, we do not have a lot of evidence that there is a difference for either stimulus type, as both HPD intervals include 0.

Instead of getting the summary of the distribution via emmeans, we can also use the capabilities of tidybayes and extract the samples in a tidy way. Then we use one of the convenient aggregation functions coming with tidybayes and aggregate the samples based on the same conditioning variable. After trying a few different options, I have the feeling that emmeans‘ hpd.summary() function uses the same approach for calculating HPD intervals as tidybayes, as both results match.

samp1 <- fit_wiener %>%

emmeans("condition", by = "frequency") %>%

pairs %>%

gather_emmeans_draws()

samp1 %>%

median_hdi()

# # A tibble: 2 x 8

# # Groups: contrast [1]

# contrast frequency .value .lower .upper .width .point .interval

# <fct> <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

# 1 accuracy - speed high -0.225 -0.696 0.256 0.95 median hdi

# 2 accuracy - speed nw_high 0.249 -0.0647 0.550 0.95 median hdi

Instead of the median, we can also use the mode as our point estimate. In the present case the differences between both are not large but noticeable for the word stimuli.

samp1 %>% mode_hdi() # # A tibble: 2 x 8 # # Groups: contrast [1] # contrast frequency .value .lower .upper .width .point .interval # <fct> <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr> # 1 accuracy - speed high -0.190 -0.696 0.256 0.95 mode hdi # 2 accuracy - speed nw_high 0.252 -0.0647 0.550 0.95 mode hdi

Further, we might use a different way for calculating HPD intervals. I have the feeling, Rob Hyndman’s hdrcde package provides the most elaborated set of functions for estimating highest density intervals. Consequently, this is what we use next. Note that the package need to be installed for that.

To use it in a tidy way, we write a short function returning a data.frame in a list. Thus, when called within summarise we get a list-column. Consequently, we have to call unnest to get a nice output.

get_hdi <- function(x, level = 95) {

tmp <- hdrcde::hdr(x, prob = level)

list(data.frame(mode = tmp$mode[1], lower = tmp$hdr[1,1], upper = tmp$hdr[1,2]))

}

samp1 %>%

summarise(hdi = get_hdi(.value)) %>%

unnest

# # A tibble: 2 x 5

# # Groups: contrast [1]

# contrast frequency mode lower upper

# <fct> <fct> <dbl> <dbl> <dbl>

# 1 accuracy - speed high -0.227 -0.712 0.247

# 2 accuracy - speed nw_high 0.249 -0.0616 0.558

The results differ again slightly, but not too much. Perhaps more importantly, there is still no real evidence for a difference in the drift rate between conditions. Even when looking only at 80% HPD intervals there is only evidence for a difference for the non-word stimuli.

samp1 %>% summarise(hdi = get_hdi(.value, level = 80)) %>% unnest # # A tibble: 2 x 5 # # Groups: contrast [1] # contrast frequency mode lower upper # <fct> <fct> <dbl> <dbl> <dbl> # 1 accuracy - speed high -0.212 -0.540 0.0768 # 2 accuracy - speed nw_high 0.246 0.0547 0.442

Because we have the samples in a convenient form, we could now evaluate whether there is any evidence for a drift rate difference between conditions for both, word and non-word stimuli. One problem for this is, however, that the direction of the effect differs between words and non-words. This is a consequence from the fact that word stimuli require a response at the lower decision boundary and non-words a response at the upper boundary. Consequently, we need to multiply the effect with -1 for one of the conditions. After that, we can take the mean of both conditions. We do this via tidyverse magic and also add the number of values that are aggregated in this way to the table. This is just a precaution to make sure that our logic is correct and we always aggregate exactly two values. As the final check shows, this is the case.

samp2 <- samp1 %>%

mutate(val2 = if_else(frequency == "high", -1*.value, .value)) %>%

group_by(contrast, .draw) %>%

summarise(value = mean(val2),

n = n())

all(samp2$n == 2)

# [1] TRUE

We can then investigate the resulting difference distribution. One way to do so is in a graphical manner via a histogram. As recommended by Hadley Wickham, it makes sense to play around with the number of bins a bit until the figure looks good. Given we have quite a large number of samples, 75 bins seemed good to me. With less bins there was not enough granularity, with more bins I felt there were too many small peaks.

ggplot(samp2, aes(value)) + geom_histogram(bins = 75) + geom_vline(xintercept = 0)

This shows that, whereas quite a bit of the posterior mass is to the right of 0, a non negligible part is still to the left. So there is some evidence for a difference, but it is still not very strong, even when looking at words and non-words together.

We can also investigate this difference distribution via the HPD intervals. To get a better overview we now look at several intervals sizes:

hdrcde::hdr(samp2$value, prob = c(99, 95, 90, 80, 85, 50)) # $`hdr` # [,1] [,2] # 99% -0.1825 0.669 # 95% -0.0669 0.554 # 90% -0.0209 0.505 # 85% 0.0104 0.471 # 80% 0.0333 0.445 # 50% 0.1214 0.340 # # $mode # [1] 0.225 # # $falpha # 1% 5% 10% 15% 20% 50% # 0.116 0.476 0.757 0.984 1.161 1.857

This shows that only for the 85% interval and smaller intervals is 0 excluded. Note, you can use hdrcde::hdr.den instead of hdrcde::hdr to get a graphical overview of the output.

Using Bayesian p-values

An approach that requires less arbitrary cutoffs then HPDs (for which we have to define the width) is to calculate the actual proportion of samples below 0:

mean(samp2$value < 0) # [1] 0.0665

As explained above, if this proportion would be small, this would constitute evidence for a difference. Here, the proportion of samples below 0 is .067. Unfortunately, .067 is a bit above the magical cutoff of .05, which is universally accepted as delineating small from big numbers, or perhaps more appropriately, likely from unlikely probabilities.

Let us look at such a proportion a bit more in depth. If two posterior distributions are lying exactly on top of each other, the resulting difference distribution is centered on 0 and exactly 50% of the difference distribution would be on either side of 0. Thus, a proportion of 50% corresponds to the least evidence for a difference, or alternatively, to the strongest evidence for an absence of a difference. One further consequence is that both, values near 0 and values near 1, are indicative of a difference, albeit in different directions. To make interpretation of these proportions easier, I suggest to always calculate them in such a way that small values represent evidence for a difference (e.g., by subtracting the proportion from 1 if it is above .5).

But what does this proportion tell us exactly? It represents the probability that there is a difference in a specific direction. Thus, it represents one-sided evidence for a difference. In contrast, for a 95% HPD we remove 2.5% from each sides of the difference distribution. To ensure this proportion has the same two-sided property as our HPD intervals, we need to multiply it by 2. A further benefit of this multiplication is that it stretches the range to the whole probability scale (i.e., from 0 to 1).

Thus, the resulting value is a probability (i.e., ranging from 0 to 1), with values near zero denoting evidence for a difference, and values near one provide some evidence against a difference. Thus, in contrast to a classical p-value it is a continuous measure of evidence for (when near 0) or against (when near 1) a difference between the parameter estimates. Given its superficial similarity with classical p-values (i.e., low values are seen as evidence for a difference), we could call this it a version of a Bayesian p-value or pB for short. In the present case we could say: The pB value for a difference between speed and accuracy conditions in drift rate across word and non-word stimuli is .13, indicating that the evidence for a difference is at best weak.

Bayesian p-values of course allows us to misuse them in the same way that we can misuse classical p-values. For example, by introducing arbitrary cutoff values, such as at .05. Imagine for a second that we are interested in testing whether there are differences in the absolute amount of evidence as measured via drift rate for any of the four cells of the design (I am not suggesting that is particularly sensible). For this, we would have to transform the posterior for all drift rates onto the same side (note, we do not want to take the absolute values as we still want to retain the information of switching from positive to negative drift rates or the other way around). For example, by multiplying the drift rate for words by -1. We do so and then inspect the cell means.

samp3 <- fit_wiener %>%

emmeans( ~ condition*frequency) %>%

gather_emmeans_draws() %>%

mutate(.value = if_else(frequency == "high", -1 * .value, .value),

intera = paste(condition, frequency, sep = "."))

samp3 %>%

mode_hdi(.value)

# # A tibble: 4 x 8

# # Groups: condition [2]

# condition frequency .value .lower .upper .width .point .interval

# <fct> <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

# 1 accuracy high 2.97 2.56 3.34 0.95 mode hdi

# 2 accuracy nw_high 2.25 1.96 2.50 0.95 mode hdi

# 3 speed high 2.76 2.28 3.10 0.95 mode hdi

# 4 speed nw_high 2.00 1.64 2.34 0.95 mode hdi

Inspection of the four cell means suggests that drift rate values for words are larger then the values for non-words.

To get an overview of all pairwise differences using an arbitrary cut-off value, I have written two functions that returns a compact letter display of all pairwise comparisons. The functions require the data in the wide format, with each column representing the draws for one parameter. Note that the compact letter display is calculated via another package, multcompView, which needs to be installed before using these functions.

get_p_matrix <- function(df, only_low = TRUE) {

# pre-define matrix

out <- matrix(-1, nrow = ncol(df), ncol = ncol(df), dimnames = list(colnames(df), colnames(df)))

for (i in seq_len(ncol(df))) {

for (j in seq_len(ncol(df))) {

out[i, j] <- mean(df[,i] < df[,j])

}

}

if (only_low) out[out > .5] <- 1- out[out > .5]

out

}

cld_pmatrix <- function(model, pars, level = 0.05) {

p_matrix <- get_p_matrix(model)

lp_matrix <- (p_matrix < (level/2) | p_matrix > (1-(level/2)))

cld <- multcompView::multcompLetters(lp_matrix)$Letters

cld

}

samp3 %>%

ungroup() %>% ## to get rid of unneeded columns

select(.value, intera, .draw) %>%

spread(intera, .value) %>%

select(-.draw) %>% ## we need to get rid of all columns not containing draws

cld_pmatrix()

# accuracy.high accuracy.nw_high speed.high speed.nw_high

# "a" "b" "a" "b"

In a compact letter display, conditions that share a common letter do not differ according to the criterion. Conditions that do not share a common letter do differ according to the criterion. Here, the compact letter display is not super informative and just recovers what we have seen above. The drift rates for the words form one group and the drift rates for the non-words form another group. In cases with more conditions or more complicated difference pattern compact letter displays can be quite informative.

We could have also used the functionality of tidybayes to inspect all pairwise comparisons. Note that it is important to use ungroup before invoking the compare_levels function. Otherwise we get an error that is difficult to understand (the grouping appears to be a consequence of using emmeans).

samp3 %>% ungroup %>% compare_levels(.value, by = intera) %>% mode_hdi() # # A tibble: 6 x 7 # intera .value .lower .upper .width .point .interval # <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr> # 1 accuracy.nw_high - accuracy.high -0.715 -1.09 -0.351 0.95 mode hdi # 2 speed.high - accuracy.high -0.190 -0.696 0.256 0.95 mode hdi # 3 speed.nw_high - accuracy.high -0.946 -1.46 -0.526 0.95 mode hdi # 4 speed.high - accuracy.nw_high 0.488 0.0879 0.876 0.95 mode hdi # 5 speed.nw_high - accuracy.nw_high -0.252 -0.550 0.0647 0.95 mode hdi # 6 speed.nw_high - speed.high -0.741 -1.12 -0.309 0.95 mode hdi

Differences in Other Parameters

As discussed above, to look at the differences in the other parameter we apparently cannot use emmeans anymore. Luckily, tidybayes still offers the possibility to extract the posterior samples in a tidy way using either gather_draws or spread_draws. It appears that for either of those you need to pass the specific variable names you want to extract. We get them via get_variables:

get_variables(fit_wiener)[1:10] # [1] "b_conditionaccuracy:frequencyhigh" "b_conditionspeed:frequencyhigh" # [3] "b_conditionaccuracy:frequencynw_high" "b_conditionspeed:frequencynw_high" # [5] "b_bs_conditionaccuracy" "b_bs_conditionspeed" # [7] "b_ndt_conditionaccuracy" "b_ndt_conditionspeed" # [9] "b_bias_conditionaccuracy" "b_bias_conditionspeed"

Boundary Separation

We will use spread_draws to analyze the boundary separation. First we extract the draws and then immediately calculate the difference distribution between both.

samp_bs <- fit_wiener %>% spread_draws(b_bs_conditionaccuracy, b_bs_conditionspeed) %>% mutate(bs_diff = b_bs_conditionaccuracy - b_bs_conditionspeed) samp_bs # # A tibble: 2,000 x 6 # .chain .iteration .draw b_bs_conditionaccuracy b_bs_conditionspeed bs_diff # <int> <int> <int> <dbl> <dbl> <dbl> # 1 1 1 1 1.73 1.48 0.250 # 2 1 2 2 1.82 1.41 0.411 # 3 1 3 3 1.80 1.28 0.514 # 4 1 4 4 1.85 1.42 0.424 # 5 1 5 5 1.86 1.37 0.493 # 6 1 6 6 1.81 1.36 0.450 # 7 1 7 7 1.67 1.34 0.322 # 8 1 8 8 1.90 1.47 0.424 # 9 1 9 9 1.99 1.20 0.790 # 10 1 10 10 1.76 1.19 0.569 # # ... with 1,990 more rows

Now we can of course use the same tools as above. For example, look at the histogram. Here, I again chose 75 bins.

samp_bs %>% ggplot(aes(bs_diff)) + geom_histogram(bins = 75) + geom_vline(xintercept = 0)

The histogram reveals pretty convincing evidence for a difference. It appears as if only two samples are below 0. We confirm this suspicion and then calculate the Bayesian p-value. As it turns out, is is also extremely small.

sum(samp_bs$bs_diff < 0) # [1] 2 mean(samp_bs$bs_diff < 0) *2 # [1] 0.002

All in all we can be pretty confident that manipulating speed versus accuracy conditions affects the boundary separation in the current data set. Exactly as expected.

Non-Decision Time

For assessing differences in the non-decision time, we use gather_draws. One benefit of this function compared to spread_draws is that it makes it easy to obtain the marginal estimates. As already said above, the HPD interval overlap only very little suggesting that there is a difference between the conditions. We save the resulting marginal estimates for later in a new data.frame called ndt_mean.

samp_ndt <- fit_wiener %>% gather_draws(b_ndt_conditionaccuracy, b_ndt_conditionspeed) (ndt_mean <- samp_ndt %>% median_hdi()) # # A tibble: 2 x 7 # .variable .value .lower .upper .width .point .interval # <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr> # 1 b_ndt_conditionaccuracy 0.323 0.293 0.362 0.95 median hdi # 2 b_ndt_conditionspeed 0.262 0.235 0.295 0.95 median hdi

To evaluate the difference, the easiest approach to me seems again to spread the two variables across rows and then calculate the difference (i.e., similar to starting with spread_draws in the first place). We can then again plot the resulting difference distribution.

samp_ndt2 <- samp_ndt %>% spread(.variable, .value) %>% mutate(ndt_diff = b_ndt_conditionaccuracy - b_ndt_conditionspeed) samp_ndt2 %>% ggplot(aes(ndt_diff)) + geom_histogram(bins = 75) + geom_vline(xintercept = 0)

As previously speculated, there appears to be strong evidence for a difference. We can further confirm this via the Bayesian p-value:

mean(samp_ndt2$ndt_diff < 0) * 2 # [1] 0.005

So far this looks as if we found another clear difference in parameter estimates due to the manipulation. But this conclusion would be premature. In fact, investigating the non-decision time from the 4-parameter Wiener model estimated in this way is completely misleading. Instead of capturing a meaningful feature of the response time distribution, the non-decision time parameter is only sensitive to very few data points. Specifically, the non-decision time basically only reflects a specific feature of the distribution of minimum response times per participant and per condition or cell for which it is estimated. I will demonstrate this in the following for our example data.

We first need to load the data in the same manner as in the previous posts. We then calculate the minimum RTs per participant and condition.

data(speed_acc, package = "rtdists")

speed_acc <- droplevels(speed_acc[!speed_acc$censor,]) # remove extreme RTs

speed_acc <- droplevels(speed_acc[ speed_acc$frequency %in%

c("high", "nw_high"),])

min_val <- speed_acc %>%

group_by(condition, id) %>%

summarise(min = min(rt))

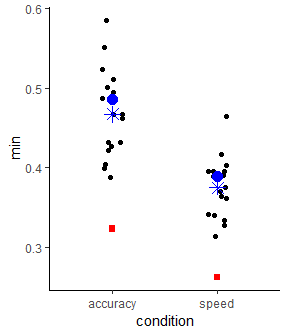

To investigate the problem, we want to graphically compare the the distribution of minimum RTs with the estimates for the non-decision time. For this, we need to add a condition column with matching condition names to the ndt_mean data.frame created above. Then, we can plot both into the same plot. We also add several summary statistics regarding the distribution of individual minimum RTs. Specifically, the black points show the individual minimum RTs for each of the two conditions; the blue + shows the median and the blue x the mean of the individual minimum RTs; the blue circle shows the midpoint between the largest and smallest value of the minimum RT distributions; the red square shows the point estimate of the non-decision time parameter with corresponding 95% HPD intervals.

ndt_mean$condition <- c("accuracy", "speed")

ggplot(min_val, aes(x = condition, y = min)) +

geom_jitter(width = 0.1) +

geom_pointrange(data = ndt_mean,

aes(y = .value, ymin = .lower, ymax = .upper),

shape = 15, size = 1, color = "red") +

stat_summary(col = "blue", size = 3.5, shape = 3,

fun.y = "median", geom = "point") +

stat_summary(col = "blue", size = 3.5, shape = 4,

fun.y = "mean", geom = "point") +

stat_summary(col = "blue", size = 3.5, shape = 16,

fun.y = function(x) (min(x) + max(x))/2,

geom = "point")

What this graph rather impressively shows is that the estimate of the non-decision time almost perfectly matches the midpoint between largest and smallest minimum RT (i.e., the blue dot). Let us put this in perspective by comparing the number of minimum data points (i.e., the number of participants) to the number of total data points.

speed_acc %>% group_by(condition) %>% summarise(n()) # # A tibble: 2 x 2 # condition `n()` # <fct> <int> # 1 accuracy 5221 # 2 speed 5241 length(unique(speed_acc$id)) # [1] 17 17 / 5000 # [1] 0.0034

This shows that the non-decision time parameter, one of only four model parameters, is essentially completely determined by less than .5% of the data. If any of these minimum RTs is an outlier (which at least in the accuracy condition seems likely) a single response time can have an immense influence on the parameter estimate. In other words, it can hardly be assumed that with the current implementation the non-decision time parameter reflects an actual latent process. Instead, it simply reflects the midpoint between smallest and largest minimum RT per participant and condition, slightly weighted toward the mass of the distribution of minimum RTs. This parameter estimate should not be used to draw substantive conclusions.

In the present case, this confound does not appear to be too consequential. If only one of the data points in the accuracy condition is an outlier and the other data points are faithful representatives of the leading edge of the response time distribution (which is essentially what the non-decision time is supposed to capture), the current parameter estimates underestimate the true difference. Using a more robust ad-hoc measure of the leading edge, specifically the 10% trimmed mean of the 40 fastest RTs per participant and condition plotted below, further supports this conclusion. This graph also does not contain any clear outliers anymore. For reference, the non-decision time estimates are still included. Nevertheless, having a parameter be essentially driven by very few data points seems completely at odds with the general idea of cognitive modeling and the interpretation of non-decision times obtained with such a model cannot be recommended.

min_val2 <- speed_acc %>%

group_by(condition, id) %>%

summarise(min = mean(sort(rt)[1:40], trim = 0.1))

ggplot(min_val2, aes(x = condition, y = min)) +

geom_jitter(width = 0.1) +

stat_summary(col = "blue", size = 3.5, shape = 3,

fun.y = "median", geom = "point") +

stat_summary(col = "blue", size = 3.5, shape = 4,

fun.y = "mean", geom = "point") +

stat_summary(col = "blue", size = 3.5, shape = 16,

fun.y = function(x) (min(x) + max(x))/2,

geom = "point") +

geom_point(data = ndt_mean, aes(y = .value), shape = 15,

size = 2, color = "red")

It is important to note that this confound does not hold for all implementations of the diffusion model, but is specific to the 4-parameter Wiener model as implemented here. There are solutions for avoiding this problem, two of which I want to list here. First, one could add across trial variability in the non-decision time. This variability is often assumed to come a uniform distribution which can capture outliers at the leading edge of the response time distribution. Second, instead of only fitting a diffusion model one could assume that some of the responses are contaminants coming from a different process, for example random responses from a uniform distribution ranging from the absolute minimum to maximum RT. Technically, this would consitute a mixture model between the diffusion process and a uniform distribution with either a free or fixed mixture/contamination rate (e.g., ). It should be relatively easy to implement such a mixture model via a custom_family in brms and I hope to find the time to do that at some later point.

I am of course not the first one to discover this behavior of the 4-parameter Wiener model (see e.g., ). However, this problem seems especially prevalent in a Bayesian setting as the 4-parameter model variant is readily available and model variants appropriately dealing with this problem are not. Some time ago I asked Chris Donkin and Greg Cox what they thought would be the best way to address this issue and the one thing I remember from this discussion was Chris’ remark that, when he uses the 4-parameter Wiener model, he simply ignores the non-decision time parameter. That still seems like the best course of action to me.

I hope there are not too many papers out there that use the 4-parameter model in such a way and interpret differences in the non-decision time parameter. If you know of one, I would be interested to learn about it. Either write me a mail or post it in the comments below.

Starting Point / Bias

Finally, we can take a look at the starting point or bias. We do this again using spread_draws and then plot the resulting difference distribution.

samp_bias <- fit_wiener %>% spread_draws(b_bias_conditionaccuracy, b_bias_conditionspeed) %>% mutate(bias_diff = b_bias_conditionaccuracy - b_bias_conditionspeed) samp_bias %>% ggplot(aes(bias_diff)) + geom_histogram(bins = 100) + geom_vline(xintercept = 0)

The difference distributions suggests there might be a difference. Consequently, we calculate the Bayesian p-value next. Note that we calculate the difference in the other direction this time so that evidence for a difference is represented by small values.

mean(samp_bias$bias_diff > 0) *2 # [1] 0.046

We get lucky and our Bayesian p-value is just below .05, encouraging us to believe that the difference is real. To round this up, we again take a look at the estimates:

fit_wiener %>% gather_draws(b_bias_conditionaccuracy, b_bias_conditionspeed) %>% summarise(hdi = get_hdi(.value, level = 80)) %>% unnest # # A tibble: 2 x 4 # .variable mode lower upper # <chr> <dbl> <dbl> <dbl> # 1 b_bias_conditionaccuracy 0.470 0.457 0.484 # 2 b_bias_conditionspeed 0.498 0.484 0.516

Together with the evidence for a difference we can now postulate in a more confident manner that for the accuracy condition there is a bias toward the lower boundary and the “word” responses, whereas evidence accumulation starts unbiased in the speed condition.

Closing Words

This third part wraps up a the most important steps in a diffusion model analysis with brms. Part I shows how to setup the model, Part II shows how to evaluate the adequacy of the model, and the present Part III shows how to inspect the parameter and test hypotheses about them.

As I have mentioned quite a bit throughout these parts, the model used here is not the full diffusion model, but the 4-parameter Wiener model. Whereas this makes estimation possible in the first place, it comes with a few problems. One of them was discussed at length in the present part. The estimate of the non-decision time parameter essentially captures a feature of the distribution of minimum RTs. If these are contaminated by responses that cannot be assumed to come from the same process as the other responses (which I believe a priori to be quite likely), the estimate becomes rather meaningless. My take away from this is that I would not interpret these estimates at all. I feel that the dangers outweigh the benefits by far.

Another feature of the 4-parameter Wiener model is that, in the absence of a bias for any of the response options, it predicts equal mean response times for correct and error responses. This is perhaps the main theoretical constraint which has led to the development of many of the more highly parameterized model variants, such as the full (i.e., 7-parameter) diffusion model. An overview of this issue can, for example, be found in . They write (p. 335):

Depending on the experimental manipulation, RTs for errors are sometimes shorter than RTs for correct responses, sometimes longer, and sometimes there is a crossover in which errors are slower than correct responses when accuracy is low and faster than correct responses when accuracy is high. The models must be capable of capturing all these aspects of a data set.

For the present data we find a specific pattern that is often seen as typical. As shown below, error RTs are quite a bit slower than correct RTs in the accuracy condition. This effect cannot be found in the speed condition where, if anything, error RTs are faster than correct RTs.

speed_acc %>%

mutate(correct = stim_cat == response) %>%

group_by(condition, correct, id) %>%

summarise(mean = mean(rt),

se = mean(rt)/sqrt(n())) %>%

summarise(mean = mean(mean),

se = mean(se))

# # A tibble: 4 x 4

# # Groups: condition [?]

# condition correct mean se

# <fct> <lgl> <dbl> <dbl>

# 1 accuracy FALSE 0.751 0.339

# 2 accuracy TRUE 0.693 0.0409

# 3 speed FALSE 0.491 0.103

# 4 speed TRUE 0.513 0.0314

Given this difference in the relative speeds of correct and error responses in the accuracy condition, it may seem unsurprising that the accuracy condition is also the one in which we have a measurable bias. Specifically, a bias towards the word responses. However, as can be seen by adding stim_cat into the group_by call above, the difference in the relative error rate is particularly strong for non-words where a bias toward words should lead to faster errors. Thus, it appears that some of the more subtle effects in the data are not fully accounted for in the current model variant.

The canonical way for dealing with differences in the relative speed of errors in diffusion modeling is via across-trial variabilities in the model parameters (see ). Variability in the starting point (introduced by Laming, 1968) allows errors RTs to be faster than correct RTs. Variability in the drift rate (introduced by ) allows error RTs to be slower than correct RTs. (As discussed above, variability in the non-decision time allows its parameter estimates to be less influenced by contaminates or individual outliers.) However, as described below, introducing these variabilities in a Bayesian framework comes with its own problems. Furthermore, there is a recent discussion of the value of these variabilities from a measurement standpoint.

Possible Future Extensions

Whereas this series comes to an end here, there are a few further things that seem either important, interesting, or viable. Maybe I will have some time in the future to talk about these as well, but I suggest to not expect those soon.

- One important thing we have not yet looked at is the estimates of the group-level parameters (i.e., standard deviations and correlations). They may contain important information about the specific data set and research question, but also about the tradeoffs of the model parameters.

-

Replacing the pure Wiener process with a mixture between a Wiener and a uniform distribution to be able to interpret the non-decision time. As written above, this should be doable with a

custom_familyinbrms. -

As described above, one of the driving forces for modern response time models, such as the 7-parameter diffusion model, were differences in the relative speed of error and correct RTs. These are usually explained via variabilities in the model parameters. One relatively straight forward way to implement these variabilities in a Bayesian setting would be via the hierarchical structure. For example, each participant gets a by-trial random intercept for the drift rate,

+ (0+id||trial)(the double bar notation should ensure that these are uncorrelated across participants). Whereas this sounds conceptually simple, I doubt such a model will converge in a reasonable timeframe. Furthermore, as shown by , a model in which the shape of the variability distribution is essentially unconstrained (as is the case when only constraining it via the prior as suggested here) is not testable. The model becomes unfalsifiable as it can predict any data pattern. Given the importance of this approach from a theoretical point of view it nevertheless seems to be an extremely important angle to explore. -

Fitting the Wiener model takes quite a lot of time. It would be interesting to compare the fit using full Bayesian inference (i.e., sampling as done here) with variational Bayes (i.e., parametric approximation of the posterior), which is also implemented in

Stan. I expect that it does not work that well, but the comparison would still be interesting. Recently, diagnostics for variational Bayes were introduced. -

The diffusion model is of course only one model for response time data. A popular alternative is the LBA. I know there are some implementations in

Stanout there, so if they could be accessed viabrms, this would be quite interesting.

The RMarkdown file for this post is available here.

[…] second part shows how to perform model diagnostics and how to asses the model fit. The third part shows how to test for differences in parameters between […]

[…] between parameters as a function of the speed-accuracy condition. This is the topic of the third blog post. I am hopeful it will not take two months this […]